Crypto AI Projects Would Need to Buy Chips Worth Their Entire Market Cap to Meet Ambitions


- The possibility of text-to-video generation excites the crypto market, and AI tokens rose when OpenAI first unveiled a demo of Sora.

- But making this mainstream will take a staggering amount of compute power: more server-grade H100 GPUs than Nvidia produces in a year, or than its largest customers collectively run in their data centers.

How many graphics processing units (GPUs) will be required to make text-to-video generation mainstream? Hundreds of thousands, and more than are currently in use by Microsoft (MSFT), Meta (META), and Google (GOOG) combined.

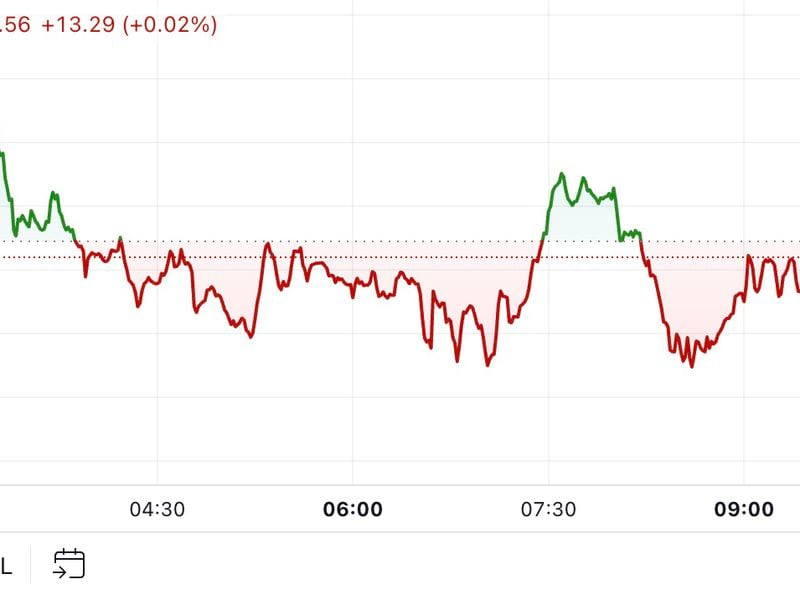

The first demo of OpenAI’s text-to-video generator Sora amazed the world and renewed interest in artificial intelligence (AI) tokens, many of which surged in the aftermath.

In the weeks that followed, many crypto AI projects emerged, also promising to do text-to-video and text-to-image generation, and the AI token category now has a $25 billion market cap according to CoinGecko data.

Behind the promise of AI-generated videos are armies of GPUs, the processors from the likes of Nvidia (NVDA) and AMD (AMD), which make the AI revolution possible thanks to their ability to process large volumes of data in parallel.

But just how many GPUs will it take to make AI-generated video mainstream? More than the major big tech companies had in their arsenals in 2023.

An Army of 720,000 Nvidia H100 GPUs

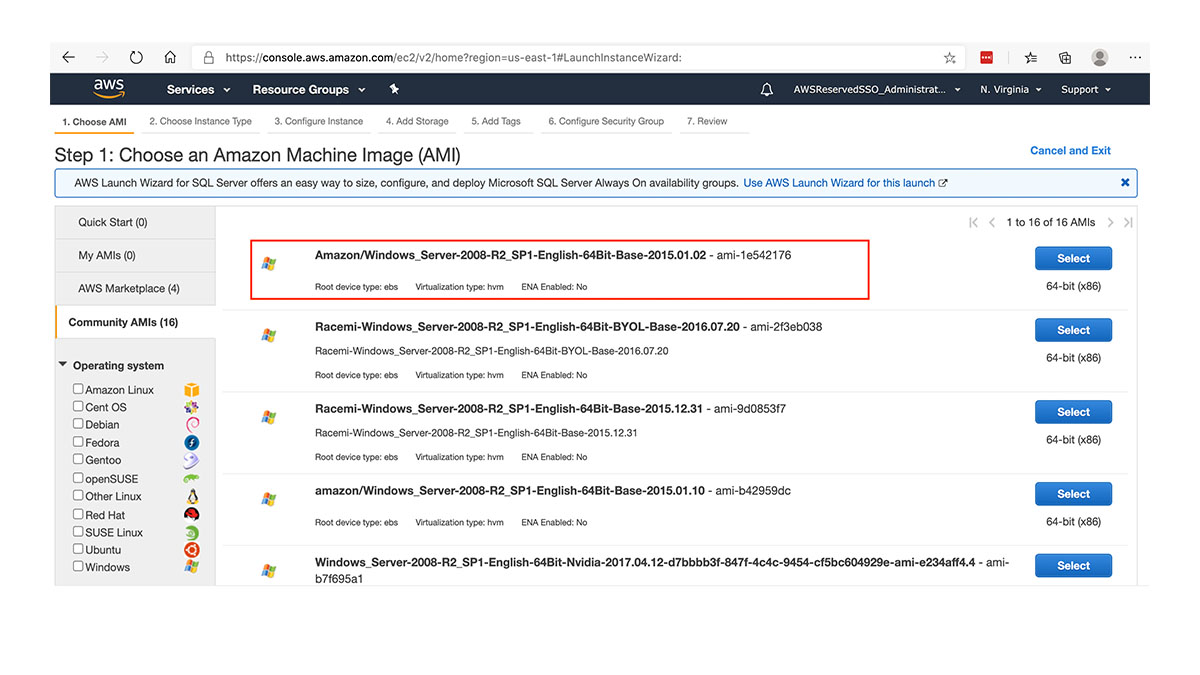

A recent research report by Factorial Funds estimates that 720,000 high-end Nvidia H100 GPUs are required to support the creator community of TikTok and YouTube.

Training Sora, Factorial Funds writes, takes up to 10,500 powerful GPUs running for a month, and at inference each GPU can generate only about 5 minutes of video per hour.
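To get a feel for these figures, the arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not a reconstruction of the Factorial Funds methodology: the 30-day month and round-the-clock utilization are assumptions made here, and the function names are hypothetical.

```python
# Figures quoted in the article from the Factorial Funds estimate.
TRAINING_GPUS = 10_500               # upper-end GPU count for one month of training
VIDEO_MINUTES_PER_GPU_HOUR = 5       # inference throughput per GPU
FLEET_SIZE = 720_000                 # estimated H100s for TikTok/YouTube-scale use

# Assumptions made for this sketch (not stated in the article).
HOURS_PER_DAY = 24                   # every GPU runs around the clock
DAYS_PER_MONTH = 30


def training_gpu_hours(gpus: int, days: int = DAYS_PER_MONTH) -> int:
    """Total GPU-hours consumed by a training run of the given length."""
    return gpus * days * HOURS_PER_DAY


def daily_video_minutes(gpus: int) -> int:
    """Minutes of video a fleet could generate per day at full utilization."""
    return gpus * VIDEO_MINUTES_PER_GPU_HOUR * HOURS_PER_DAY


if __name__ == "__main__":
    print(f"Training: {training_gpu_hours(TRAINING_GPUS):,} GPU-hours")
    print(f"One GPU: {daily_video_minutes(1)} minutes of video per day")
    print(f"Full fleet: {daily_video_minutes(FLEET_SIZE):,} minutes of video per day")
```

Under these assumptions a single GPU yields about two hours of video per day, so the fleet-wide number is a ceiling rather than a forecast.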


As the chart above demonstrates, training Sora requires significantly more compute power than GPT-4 or still-image generation.

With widespread adoption, inference will surpass training in compute usage. In other words, as more people and companies use AI models like Sora to generate videos, the compute power needed to create new videos (inference) will exceed the power that was needed to train the model in the first place.

To put things in perspective, Nvidia shipped 550,000 of the H100 GPUs in 2023.

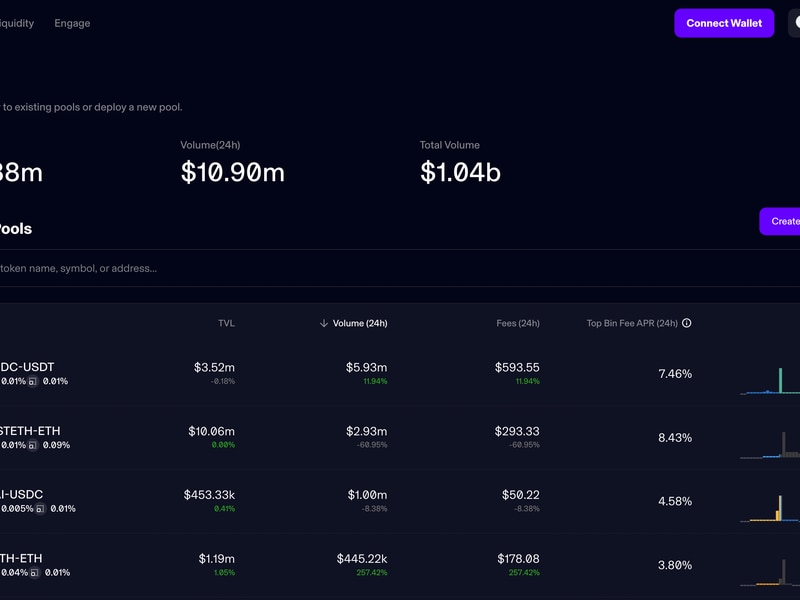

Data from Statista shows that the twelve largest customers using Nvidia’s H100 GPUs collectively have 650,000 of the cards, and the two largest—Meta and Microsoft—have 300,000 between them.
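The gap between the estimated requirement and the available supply is simple subtraction, sketched below with the figures quoted above. Treating each supply figure as if it were entirely available for video generation is an assumption made here for illustration, and the helper names are hypothetical.

```python
# Figures quoted in the article.
REQUIRED_GPUS = 720_000          # Factorial Funds estimate

SUPPLY = {
    "Nvidia H100 shipments, 2023": 550_000,
    "Twelve largest H100 customers": 650_000,
    "Meta and Microsoft combined": 300_000,
}


def shortfall(required: int, available: int) -> int:
    """GPUs still missing after using everything available (never negative)."""
    return max(required - available, 0)


def coverage(required: int, available: int) -> float:
    """Fraction of the requirement that the available GPUs would cover."""
    return available / required


if __name__ == "__main__":
    for name, available in SUPPLY.items():
        gap = shortfall(REQUIRED_GPUS, available)
        share = coverage(REQUIRED_GPUS, available)
        print(f"{name}: covers {share:.0%}, short by {gap:,} GPUs")
```

Even a full year of 2023 shipments would cover roughly three quarters of the estimate, leaving a gap of 170,000 cards.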


Assuming a cost of $30,000 per card, it would take $21.6 billion in GPUs to bring Sora’s vision of text-to-video generation to the mainstream, which is nearly the entire market cap of AI tokens at the moment.

That’s if you can physically acquire all the GPUs to do it.
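The cost comparison is a single multiplication, shown here as a minimal sketch. The GPU count, the $30,000 price, and the $25 billion market cap are the figures quoted in the article; the function name is hypothetical, and the calculation ignores power, networking, and data center costs.

```python
# Figures quoted in the article.
REQUIRED_GPUS = 720_000
PRICE_PER_GPU_USD = 30_000                 # the article's assumed price per card
AI_TOKEN_MARKET_CAP_USD = 25_000_000_000   # CoinGecko figure cited in the article


def fleet_cost(gpus: int, price_per_gpu: int) -> int:
    """Hardware-only cost of buying the given number of GPUs."""
    return gpus * price_per_gpu


total_cost = fleet_cost(REQUIRED_GPUS, PRICE_PER_GPU_USD)    # 21_600_000_000
share_of_market_cap = total_cost / AI_TOKEN_MARKET_CAP_USD   # about 0.864

if __name__ == "__main__":
    print(f"Fleet cost: ${total_cost / 1e9:.1f} billion")
    print(f"Share of AI token market cap: {share_of_market_cap:.1%}")
```

Because the cost scales linearly with the card price, any change to the $30,000 assumption moves the total by the same proportion.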

Nvidia isn’t the only game in town

While Nvidia is synonymous with the AI revolution, it’s important to remember that it’s not the only game in town.

Its perennial chip rival AMD makes competing products, and investors have also handsomely rewarded the company, pushing its stock from the $2 range in the fall of 2012 to over $175 today.

There are also other ways to outsource computing power to GPU farms. Render (RNDR) offers distributed GPU computing, as does Akash Network (AKT). But the majority of the GPUs on these networks are retail-grade gaming GPUs, which are significantly less powerful than Nvidia’s server-grade H100 or AMD’s competing products.

Regardless, delivering the text-to-video generation that Sora and crypto AI protocols promise is going to require a herculean hardware lift. While it’s an intriguing premise and could revolutionize Hollywood’s creative workflow, don’t expect it to become mainstream anytime soon.

We’re going to need more chips.

Edited by Shaurya Malwa.